Notice

April 19, 2022 Winners' reports and implementation source code have been released.

April 7, 2022 The video recording of the awards ceremony is now available on YouTube. You can also view it at the bottom of this page.

March 16, 2022 Winners have been announced. Thank you very much to everyone who submitted the final result set.

February 21, 2022 The dataset has been made available again because the submission deadline has been extended. A leaderboard for object tracking evaluation during the extended period is also available.

February 18, 2022 The deadline for submissions has been extended to Wednesday, March 2, 2022.

February 7, 2022 The video of the online seminar has been released.

February 3, 2022 The Final Submission Application Form has been released.

January 27, 2022 The documents for the 2nd online seminar have been released.

January 18, 2022 The MOTA threshold was fixed at 0.6.

December 22, 2021 The documentation for the RISC-V reference implementation has been updated.

December 17, 2021 We have revised the list of accessories supplied with the provided board. The provided board includes an AC adapter and a power conversion cable.

December 6, 2021 We are currently accepting applications for the online seminar on the RISC-V reference implementation. Click here to apply.

December 3, 2021 The RISC-V reference implementation is now available on Google Drive. Also, a modified version of Vivado.zip has been uploaded. If you have already downloaded Vivado.zip, we apologize for the inconvenience, but please download it again.

November 30, 2021 The RISC-V reference environment has been released.

With the progress of artificial intelligence (AI) technology, AI is rapidly being deployed in society in applications such as image recognition, automated driving, and natural language processing. In edge computing in particular, AI must be realized with higher efficiency, so startups (mainly in the United States and China) and major vendors are accelerating their entry into AI hardware. Japan, too, urgently needs to develop human resources and industries for hardware that accelerates AI processing, such as LSIs and FPGAs.

Against this background, we are holding this contest. In addition to conventional, software-centered AI technology development, we aim to foster human resources and startups with AI hardware in mind, and to develop industries that utilize these technologies. In this contest, participants will develop AI hardware equipped with "RISC-V", which is currently attracting attention. The challenge is to develop a hardware system for edge computing that considers both hardware and software (network model and system optimization).

The 1st AI Edge Contest (Algorithm Contest (1))

The 2nd AI Edge Contest (Implementation Contest (1))

The 3rd AI Edge Contest (Algorithm Contest (2))

The 4th AI Edge Contest (Implementation Contest (2))

*Please refer to the winners' solutions from past AI Edge Contests.

Contest Outline

| Item | Details |
|---|---|
| Subject | (Algorithm development) Create an algorithm that detects the rectangular areas containing objects in videos taken by a vehicle's front-facing camera, and tracks those objects. (Algorithm implementation) Design hardware accelerators and implement the algorithm on the target platform equipped with RISC-V. |
| Provided data | (Train/Test) Videos from a vehicle's front-facing camera. (Train) Rectangular areas of objects, labeled with category and object ID. |
| Identifying target | Car, Pedestrian |
| Implementation | RISC-V must be used in the object tracking processing (for details, please refer to the Rules). |

| Award | Processing Speed Award | Idea Award |
|---|---|---|
| Platform | Avnet Ultra96-V2 FPGA board (Zynq UltraScale+ MPSoC ZU3EG SBVA484) | Any device |
| Evaluation | Processing speed on the target FPGA board | RISC-V utilization ideas |
| Winning requirements | The MOTA of the object tracking result on the test data is over 0.6, etc. | The solution must be practically applicable; for example, the MOTA of the object tracking result on the test data must not be far below 0.6 (less than half). |
| Final result set | Source code, area report, implementation approach, processing performance, etc. | Source code, implementation report, video of the operation, etc. |
| Prize | 1st Prize: The 1st Prize Trophy / 500,000 yen + Google Cloud Platform Coupon (100,000 yen); 2nd Prize: The 2nd Prize Trophy / 250,000 yen + Google Cloud Platform Coupon (50,000 yen); 3rd Prize: The 3rd Prize Trophy / 100,000 yen + Google Cloud Platform Coupon (50,000 yen) | Idea Award Trophy / 250,000 yen (up to 3 teams) |

| Special prize | Forum Activity Award / Article Writing Award |
|---|---|
| Prize | Amazon Gift Card (10,000 yen) (up to 3 teams) |
| Forum Activity Award | Those who have greatly contributed to solving problems in the forum. |
| Article Writing Award | Those who wrote highly educational articles on the Web (blogs, etc.) and posted links to them on the forum. |
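The winning requirements above are stated in terms of MOTA. As a rough guide, the standard CLEAR MOT definition is MOTA = 1 − Σ(FN + FP + IDSW) / ΣGT, summed over all frames, where FN are missed ground-truth objects, FP are unmatched predictions, IDSW are identity switches and GT is the number of ground-truth objects. The sketch below only aggregates per-frame counts that are assumed to be already computed; the `FrameCounts` type is hypothetical, and the official score comes from the MOTA function distributed on the data page, whose matching rules (such as the overlap threshold) are not reproduced here.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class FrameCounts:
    """Hypothetical per-frame error counts obtained after matching predictions to ground truth."""
    gt: int    # number of ground-truth objects in the frame
    fn: int    # ground-truth objects with no matched prediction (misses)
    fp: int    # predictions not matched to any ground-truth object
    idsw: int  # matched objects whose predicted ID changed since the last match


def mota(frames: Iterable[FrameCounts]) -> float:
    """Standard CLEAR MOT accuracy: 1 - (total FN + FP + IDSW) / total GT."""
    frames = list(frames)
    total_gt = sum(f.gt for f in frames)
    if total_gt == 0:
        raise ValueError("MOTA is undefined when there are no ground-truth objects")
    total_errors = sum(f.fn + f.fp + f.idsw for f in frames)
    # Can be negative when errors outnumber ground-truth objects.
    return 1.0 - total_errors / total_gt
```

For example, two frames with 10 ground-truth objects each and a total of 5 errors give 1 − 5/20 = 0.75, which would clear the 0.6 requirement.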

Subject (Algorithm development)

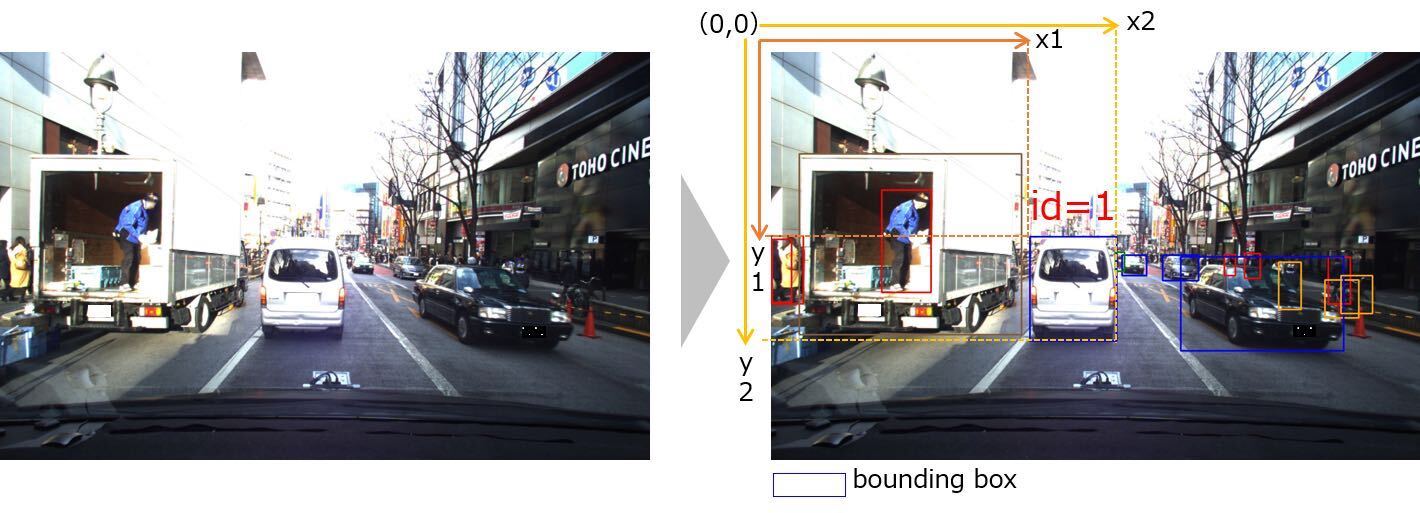

For each video captured by the vehicle front camera, predict a bounding box = (x1, y1, x2, y2) for the rectangular area containing each object, and assign an arbitrary but unique object ID to the same object throughout each video. Multiple bounding boxes may be assigned to each frame. Each bounding box is specified by four coordinates, with the upper-left corner of the frame as the origin (0, 0): the upper-left coordinate of the object area (x1, y1) and the lower-right coordinate (x2, y2).

However, the objects to be evaluated (i.e., the objects to be inferred) are limited to those that satisfy all of the following:

・Objects in one of two categories: “Car” or “Pedestrian”

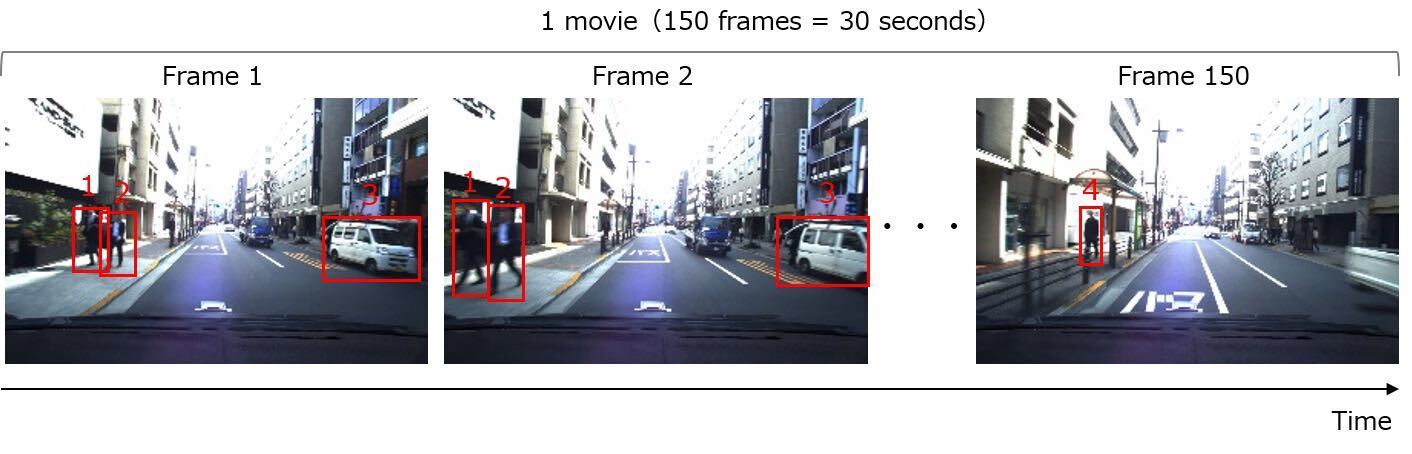

・Objects that appear in 3 or more frames of a video (the frames do not have to be consecutive)

・Objects whose rectangle area is 1024 pix² or more

*Even if an object appears in three or more frames of a video, it is not evaluated (including its rectangles of 1024 pix² or more) unless its rectangle is 1024 pix² or more in at least three frames.

*Please note that if an object that does not meet these conditions is detected, the detection is counted as a false detection.
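The evaluation-target conditions above can be sketched as a filter over one video's annotations. This is a minimal sketch under stated assumptions: the `Box` record layout is hypothetical (not the dataset's annotation format), and the area is assumed to be (x2 − x1) × (y2 − y1); if the official tooling counts pixels inclusively, it would be (x2 − x1 + 1) × (y2 − y1 + 1) instead.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple, Set

EVAL_CATEGORIES = {"Car", "Pedestrian"}
MIN_AREA = 1024   # pix^2
MIN_FRAMES = 3    # frames need not be consecutive


class Box(NamedTuple):
    """Hypothetical annotation record for one object in one frame of a single video."""
    frame: int
    object_id: int
    category: str
    x1: float
    y1: float
    x2: float
    y2: float


def area(b: Box) -> float:
    # Assumption: exclusive lower-right corner, so area = width * height.
    return max(0.0, b.x2 - b.x1) * max(0.0, b.y2 - b.y1)


def evaluated_object_ids(boxes: Iterable[Box]) -> Set[int]:
    """Return IDs of objects that are Car/Pedestrian and have >= 1024 pix^2 in >= 3 frames."""
    large_frames: Dict[int, Set[int]] = defaultdict(set)
    for b in boxes:
        if b.category in EVAL_CATEGORIES and area(b) >= MIN_AREA:
            # Only frames where the rectangle is large enough count toward the 3-frame rule.
            large_frames[b.object_id].add(b.frame)
    return {oid for oid, frames in large_frames.items() if len(frames) >= MIN_FRAMES}
```

Counting only the large-enough frames per object mirrors the note that an object seen in three or more frames is still excluded if fewer than three of those rectangles reach 1024 pix².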

Subject (Algorithm Implementation)

Implement the developed algorithm on the target platform equipped with a RISC-V chip.

The section to be optimized, whose processing time is measured, is defined as follows:

The time taken to run inference on video data already loaded into memory and then convert the result into information equivalent to the JSON output, such as the ID and rectangle of each object.
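A minimal Python sketch of where the timer starts and stops, assuming the frames are already decoded into memory before timing begins. `infer` and `convert` are hypothetical stand-ins for your own tracker and for the step that produces the JSON-equivalent output; an actual implementation on the Ultra96-V2 would likely measure the same section in its own language and runtime.

```python
import time
from statistics import mean
from typing import Any, Callable, List, Sequence, Tuple

# Hypothetical callables supplied by the participant.
InferFn = Callable[[Sequence[Any]], Any]  # in-memory frames -> tracking result
ConvertFn = Callable[[Any], Any]          # tracking result -> JSON-equivalent IDs/rectangles


def time_one_video(frames: Sequence[Any], infer: InferFn,
                   convert: ConvertFn) -> Tuple[float, Any]:
    """Time inference plus conversion for one video; loading is done by the caller, untimed."""
    start = time.perf_counter()
    tracks = infer(frames)
    result = convert(tracks)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return elapsed_ms, result


def average_ms_per_video(videos: List[Sequence[Any]], infer: InferFn,
                         convert: ConvertFn) -> float:
    """Average processing time [ms] per video over a non-empty list of in-memory videos."""
    times = [time_one_video(frames, infer, convert)[0] for frames in videos]
    return mean(times)
```

Keeping video loading outside `time_one_video` reflects the definition above, which measures only inference and conversion on data that is already in memory.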

Carefully check the RISC-V usage requirements described in the "Rules" tab before implementing.

Data

| | For Train | For Test |
|---|---|---|
| Route | Tokyo (Shibuya – Akihabara) | Tokyo (Shibuya – Akihabara) |
| Time Zone | Daytime | Daytime |
| Resolution | 1936 x 1216 | 1936 x 1216 |
| FPS | 5.0 | 5.0 |
| Category Types | Car, Pedestrian, Truck, Signal, Signs, Bicycle, Motorbike, Bus, Svehicle, Train | Car, Pedestrian |
| Number of Videos | 25 videos | 74 videos |
| Length of each video | 120 seconds | 30 seconds |
| Number of Frames per video | 600 | 150 |

Travel Route

Entry / registration flow for the contest

1. Sign up for SIGNATE (if you are not currently a member, please register using the member registration button at the top right of the page).

2. Download the data from the data tab (please agree to the terms of entry displayed when downloading).

3. Develop an algorithm and implement / optimize it on the selected target environment.

* We plan to provide FPGA boards to up to 50 applicants, selected by document review from among those who apply through the application form for the board offer document review. (The number of boards offered may increase.)

* If you do not have an Ultra96-V2 board and would like to use it as a target environment, please purchase it yourself or apply by clicking the button below.

Application Form for Board Offer Document Review

4. During the contest, submit your object tracking results using the "Submit" button at the upper right of this page and check your MOTA score on the leaderboard.

* Please refer to the MOTA function that can be downloaded on the data page.

* The MOTA score shown until the last day of the contest (public score) is the same as the MOTA score after the contest (private score).

5. (Optional) During the contest, we will publish a provisional processing time ranking as reference information. Please cooperate by submitting your results.

* Please submit your average processing time per video [ms] at the time of posting, together with the corresponding MOTA evaluation result, using the "Submit" button on the page reached from the form below.

* Submissions are accepted at any time until the deadline of 2022/1/15 and can be updated (the latest result is displayed).

* For a team, the team leader should submit on behalf of the team. The submitter's user name is displayed on the provisional processing time leaderboard.

Provisional processing time submission form

6. Submit the final result set by the end of the contest.

* For details, refer to "submission_details.pdf" that can be downloaded from the data page.

* Upload it to an online file-sharing service such as Dropbox or Gigafile, and enter the information required to download it in the following form.

Final Submission Application Form

ONLINE Award Ceremony

The AI Edge Contest is also collaborating with the 3rd Japan Automotive AI Challenge sponsored by the Society of Automotive Engineers of Japan to promote the discovery and training of engineers.
